Electronic reporting for data migration

- Eugen Glasow

- January 15, 2020

- 10:19 pm

- 5 Comments

Intro

There are still dozens of important tables in Dynamics 365 for Finance and SCM not exposed by any entity. Sometimes simple solutions like my Copy-paste with a keyboard script from Excel don’t work due to the volume or complexity of the data, and sometimes there is no UI at all.

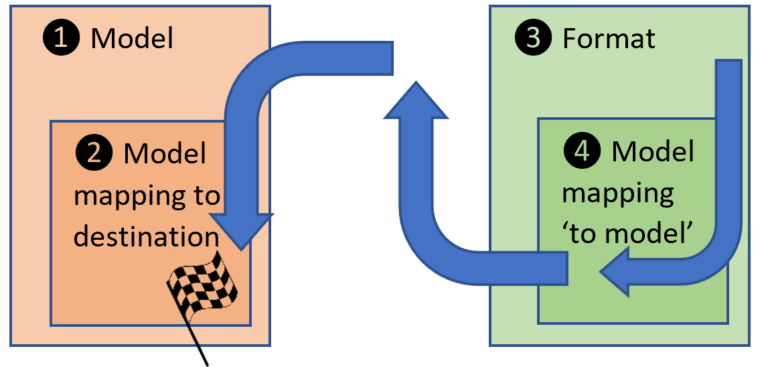

One of the notorious examples is the table EcoResCatalogControl which is associated with product attributes bound to procurement categories, see the Searchable flag in my blog Searchable product attributes. An import of these “Product category attributes” through the entity EcoResProductCategoryAttributeEntity leaves the attributes dysfunctional: the internal table EcoResCategoryAttributeLookup is left out of sync and the new attributes remain invisible in the product master.

The only form where the EcoResCatalogControl is editable and visible is the Procurement category form, but an attempt to update the flag fails with a “Missing reference” error: the system expects a EcoResCatalogControl record to exist already. Dead end.

Electronic reporting: the last resort

Since the table browser is not an option anymore in a sandbox or production environment, the number of tools available to the consultant reduces to just one: Electronic reporting. Despite the name, the Electronic reporting module is able not only to read, but also freely insert, update or delete voluntary tables in D365FO.

In essence, the idea is to make a CSV text file and import it into the EcoResCatalogControl with the help of the Electronic reporting (ER).

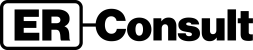

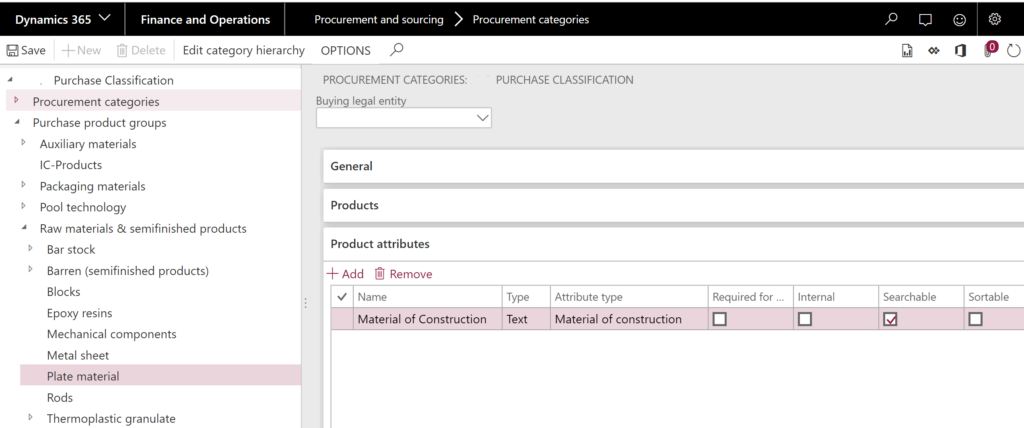

This requires a so-called Mapping to destination where the “destination” is one or many tables or entities in D365FO. The concept is nicely outlined in the blog of Mr. Ties Philippi. In total, 4 “components” are required in at least 2 ER configurations (the Mapping to destination may in theory be detached into a separate – third – configuration).

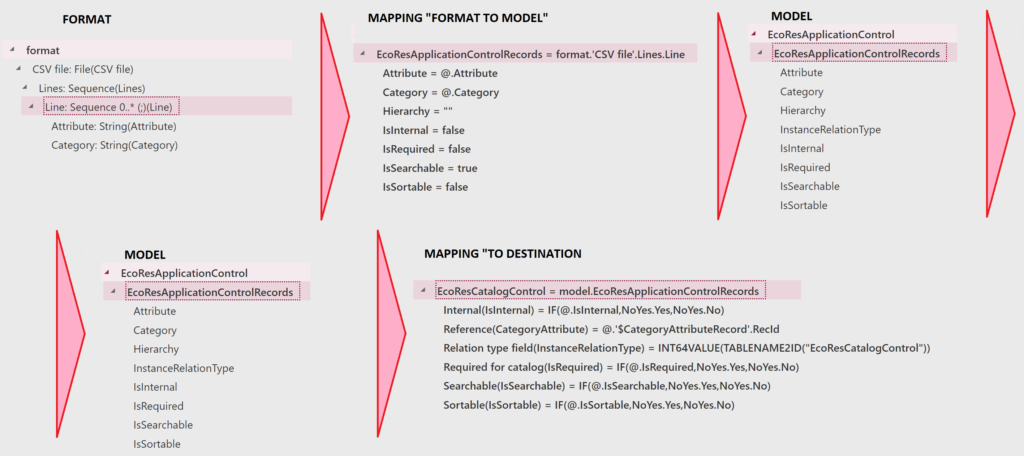

- Model

- Model mapping “To destination”

- [CSV file] Format

- Model mapping [from format] ”To model”

The execution flows in the order (3) -> (4) -> (1) -> (2).

The 2 configurations I used can be downloaded here.

First, create a model, for the simplification its structure may follow exactly the table.

Next, create a mapping with the Direction = To destination. Add the target table to the right with the button Destination, then bind the model from the left to the table at the right side of the Model mapping designer. Change the status of the configuration to Complete.

Next, create a format configuration based on the model. It should describe the CSV file structure, namely a set of records (Lines) separated by CRLF, with 2 fields in every line, separated by the semicolon “;” (in a German version of the Excel; in an English version the comma “,” is more appropriate). The encoding of the CSV format should better be UTF-8 (Excel is able to produce UTF-8 encoded files).

Do not bind the format to the model, but use the button Map format to model to create a mapping of the same name. Bind the format to the model as shown on the screenshot below. The button Run can be used to test the mapping ad hoc: it takes a CSV file, interprets it according to the format definition, translates to the model internal container and exports this container to an XML file.

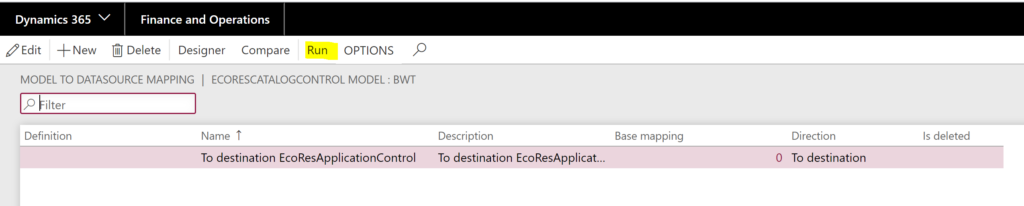

Once tested, update the format status to Completed. Make note that the import is started from the artefact (2) Model mapping to destination (with the button Run). The system looks for a format based on the model the mapping belongs to, and inside of that format it looks for a “To model” mapping.

Having found an appropriate format, the system asks for a CSV file and unleashes the import, there is no way back.

Update route

Added on 31.01.2020

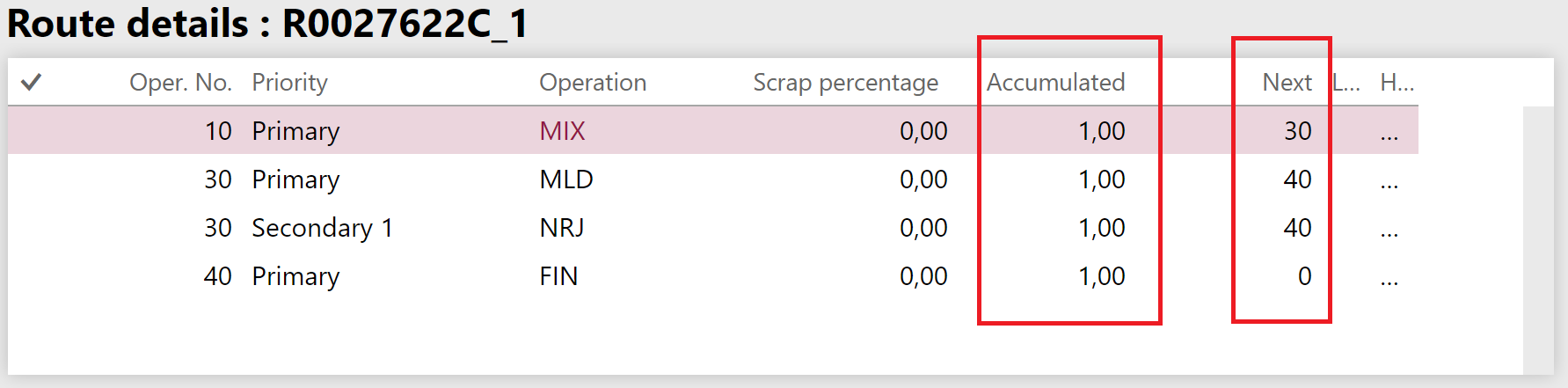

Electronic reporting destination mappings can also be used to launch X++ code. If you have ever worked with production routes, you may have noted the Update route button. The class behind is called RouteUpdate and it is executed by the system automatically from the UI on any change in the order of operations. On plain production routes, it updates the Next operation numbers, and multiplies the variable scrap percentages to cache and store accumulated scrap factors. The problem is, this class cannot be run over all routes in the batch mode; in the Route headers entity they forgot to use it. Opening every single route out of thousands imported is obviously not an option.

I took the same CSV file and created a new destination mapping which updates the RouteTable. One column in the CSV file (Attribute) contains the key RouteId, the other (Category) bears the route name. I am updating the name by the key, but in the middle I am injecting the following codeRouteSearch.newRouteId(@.Attribute).routeId

like thisLEFT(RouteSearch.newRouteId(@.Attribute).routeId,0)&@.Category

It instantiates and executes the RouteSearch class which as a by-product calls RouteUpdate in its constructor method, then gives a routeId() value back which I neglect. Thousands of routes are updated blazing fast, and the Next operation and the Accumulated scrap is set everywhere:

The ER configuration can be downloaded here: RouteUpdateMapping.zip.

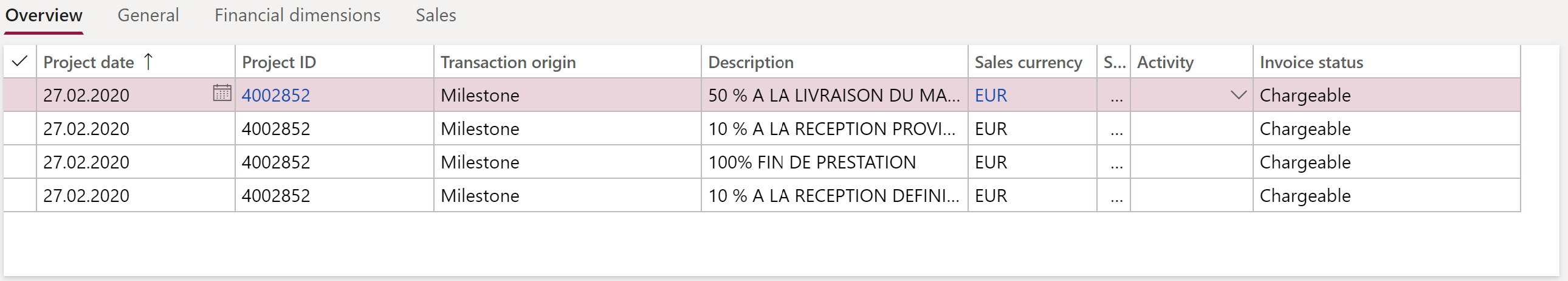

Open project milestones

Added on 16.04.2020

Finally, I have designed a data model and mappings for the fixed fee project On-account transactions aka Billing milestones. The configuration may take a project TransId from the CSV file, or it may create its own from the standard number sequence in the Project module parameters. Both the related tables ProjOnAccTrans + ProjOnAccTransSale are generated.

The ER configuration can be downloaded here: ProjOnAccTransModel.zip

Fun fact: a DMF entity for this existed in AX2012, but not D365.

Epilogue

The above crude trick works, but it is dangerous. Fixing a broken import might be a challenge, because to delete the data and start all over you need to change the destination Record action to Delete and then hope to find the right record by the primary key from the CSV file.

I would love to use standard entities, and make Microsoft add them to the application. Please vote for my 2 “favourites”:

- the management of EcoResCatalogControl in an entity and…

- the open (not invoiced) project milestones ProjOnAccTrans + ProjOnAccTransSale.

Electronic reporting blog series

Further reading:

ZUGFeRD 2.1/ Factur-X 1.0 for Dynamics 365 FO

Enumerate lines in Configurable Business Documents

D365 Electronic Reporting: JOIN records in pairs

Canvas sections in D365 Configurable Business Documents

Electronic Reporting (ER) Cookbook 4: References in a model

Electronic reporting for data migration

Electronic Reporting (ER) Cookbook 3: Working with dates

Electronic Reporting (ER) Cookbook 2: new tips from the kitchen

Electronic Reporting (ER) Cookbook