Troubleshoot the VAT Declaration in D365 for Finance

Users of the VAT declarations (both Excel and XML) in the financial department are often shocked by error messages of this kind:

- Evaluating binding of format component Excel/Sheet1/I_Turnover/Box_200

- Fehler beim Bewerten des Ausdrucks für Pfad ‚Boxes/Box81_Base‘

- Fehler beim Bewerten des Ausdrucks für Pfad ‘Boxes/Box_150’.

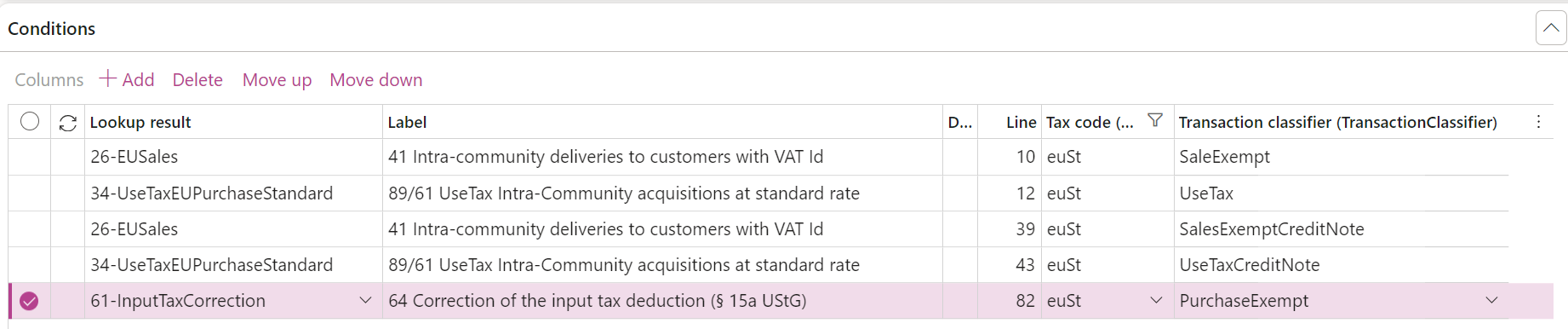

The exact error message differs by country: the first is produced by the “VAT Declaration Excel (CH)” / “VAT Declaration XML (CH)” for Switzerland, the second one is from Germany, the 3rd is from Spain. In reality, the error has nothing to do with the “Boxes” i.e. the Excel layout but a clear sign that the Application specific parameters / Setup fail to classify a specific VAT use case. Namely, a VAT code was used in a module / situation not envisioned by the FiCo functional consultant who configured the VAT Declaration.

Update 09.06.2025: meanwhile, the recent versions of VAT declarations in Electronic reporting give an exact hint which code and tax direction fell through the grate, for example: “…(Code): „ESIN0“, … (TransactionClassifier): „Purchase“…”, and the below cumbersome algorithm of finding the date, code, and the transaction classifier became obsolete.

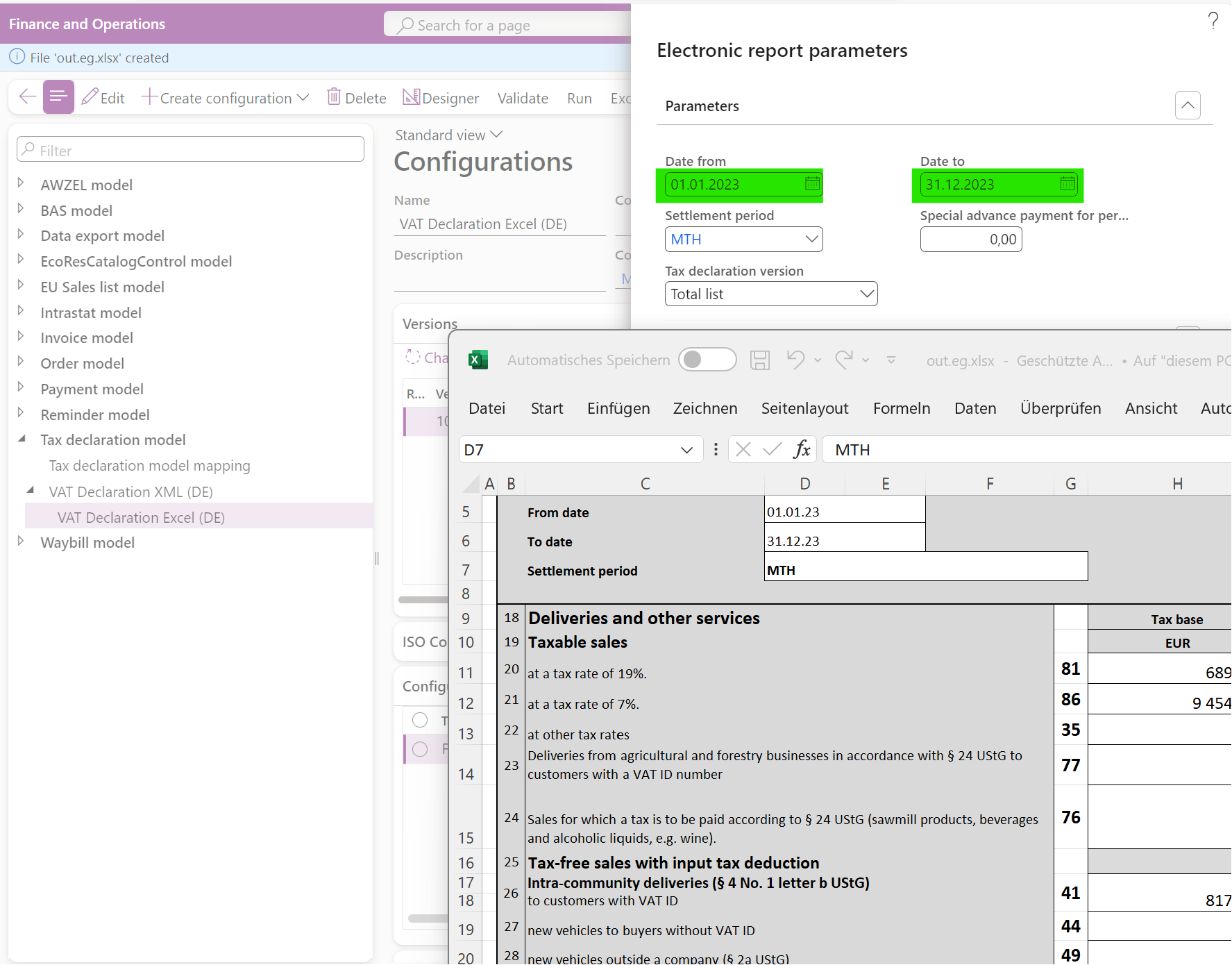

1. Use division by dichotomy to find the exact date

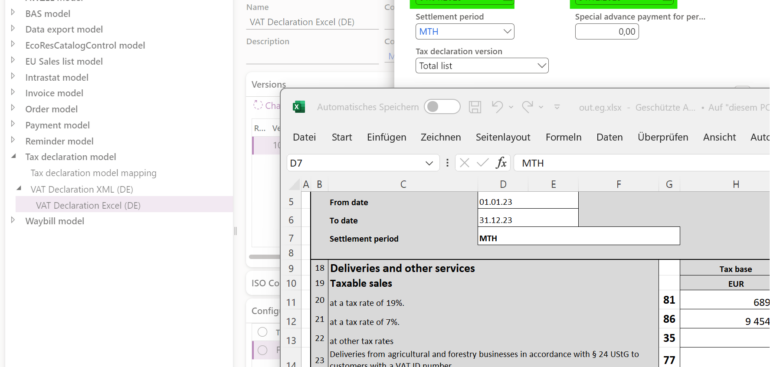

… when it happened. Most of the European VAT declarations are returned monthly, and it takes on average 7 attempts to narrow down the date. Locate the VAT declaration in the Organisation administration > Workspaces > Electronic reporting, Reporting configurations tree under the Tax declaration model node. Keep starting the report with the Run button above, variate the dates.

2. Find uncommon combinations of VAT code and Direction

Having spotted the date, filter tax transactions by that date and the VAT settlement period using the query Tax > Inquiries and reports > VAT inquiries > Posted VAT (hereafter I refer to the en-gb language of the D365 UI). Export the results into Excel. Build a pivot table by the VAT code and direction, counting the vouchers (= business cases) at the cross sections. Start looking for outliers, pay particular attention to the low counts i.e. seldom use cases. Often the mistake is obvious:

| VAT code | Duty-free purchase | Duty-free sale | Use tax | VAT payable | VAT receivable |

|---|---|---|---|---|---|

| euSt | 1 | 7 | 1 | ||

| exSt | 7 | ||||

| FoodR | 15 | ||||

| R | 2 | 5 | |||

| ServSt | 11 | ||||

| St | 39 |

In this example, the euSt code designates VAT exempt intra-community sales, or intra-community acquisitions at the standard tax rate of 19% (= “use tax” or Import VAT / purchase VAT in en-gb, see Minimalistic EU VAT Configuration in Dynamics 365). This code is not supposed to appear in the context of a VAT free purchase, but someone managed to make such a transaction in a general ledger journal when entering a EU supplier invoice.

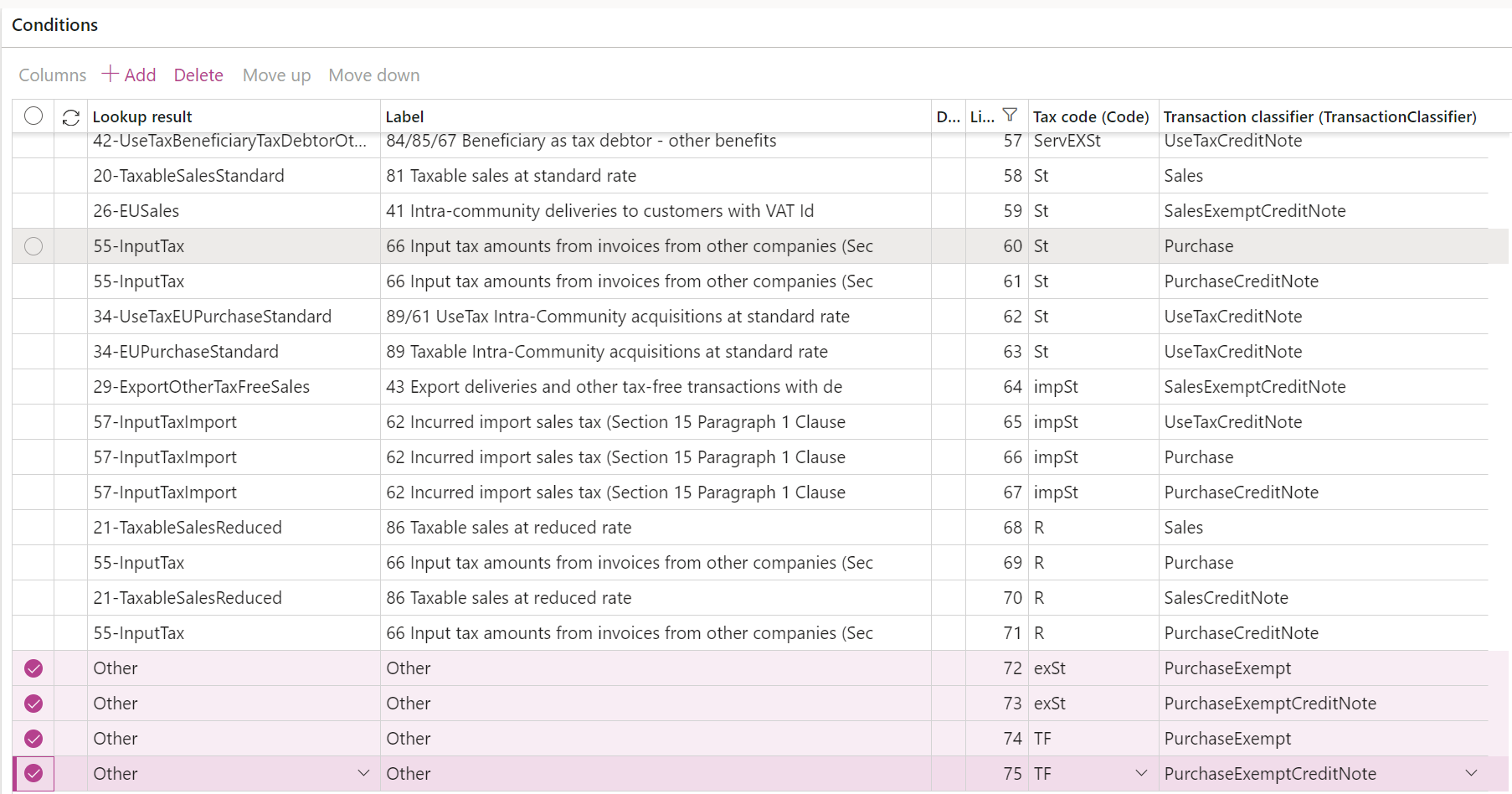

You cannot fix the tax transactions, but the Application specific parameters may be amended to account for this irregularity. Open this list of Conditions and filter it by the tax code in question to validate the suspicion:

You have to add a new line with this combination of the Tax code and the Transaction classifier to capture this exceptional case. Namely, a pair euSt–PurchaseExempt must be added. Try the VAT declaration one more time to confirm that the problem is now gone.

3. Use a fallback condition

Sometimes it is not that easy to spot a pattern: the occurrences may be too many even on a single day. Your next approach may be to leverage the placeholders *Not blank* for the Tax code and *Not blank* for the Transaction classifier. These lines must be placed at the very bottom of the list of conditions (note the Move down button).

Use these fallback lines with placeholders to redirect the total tax amount of the questionable nature into a separate VAT declaration cell which is typically empty, for example to the cell 61-InputTaxCorrection on the German “UVA” tax declaration. This will give you an idea how much is missing in tax, and what the tax origin amount may be.

Do not keep the placeholders in your PROD setup for long: by doing so, you are resigning and testifying that you don’t really know what is happening in the tax ledger but play dice with the tax authority, which is a very bad idea.

You may also have some tax transactions that do not need to be reported to the tax authority at all. Capture such a case with a combination of a VAT code and direction, and send it into the Other cell i.e. into oblivion:

4. If nothing helps

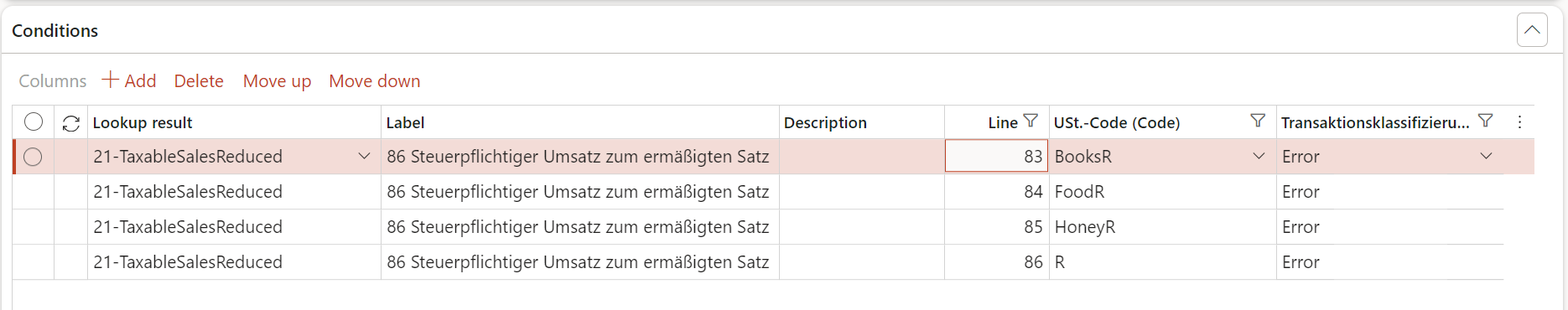

…then you may have encountered a true bug in the VAT declaration, a case that does not fit into any of the Transaction classifier categories but an Error one!

Indeed, recently we discovered that the reversal of a customer transaction settlement with a realised cash discount is accompanied by a positive adjustment of the VAT payable (i.e. we again own more VAT to the state, which is correct). But this adjustment is not attributed to the VAT direction of Sales, it results in an Error in the VAT declaration instead.

Add lines with the explicit Transaction classifier = Error to manage this case:

Blog series on Finance and VAT in the EU

Further reading:

Luxembourg VAT Declaration for D365FO in PDF

Troubleshoot the VAT Declaration in D365 for Finance

D365 Petty cash review

Advance payment invoices in Fixed fee projects in D365, D-A-CH style

Sometimes you pay Reverse Charge in D365

Minimalistic EU VAT Configuration in Dynamics 365

Austrian VAT declaration / Umsatzsteuervoranmeldung 2020

EU Tax directives