Enumerate lines in Configurable Business Documents

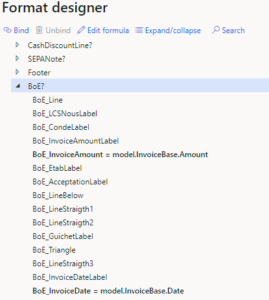

The Report Data Providers behind the delivery note, the invoice and similar documents in Dynamics 365 for Finance do not expose line numbers, for no obvious reason. For instance, the SalesInvoiceTmp table that D365 sends to the report renderer (whatever it is) lacks a line number. The situation becomes dire when it comes to Electronic Reporting / Configurable Business Documents.

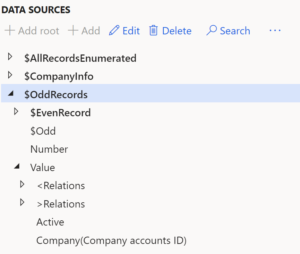

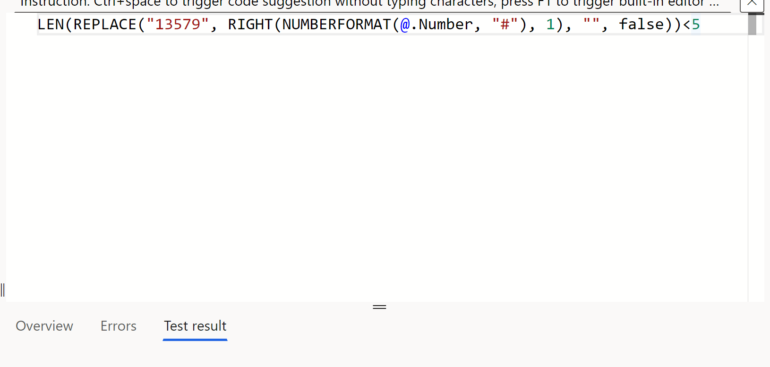

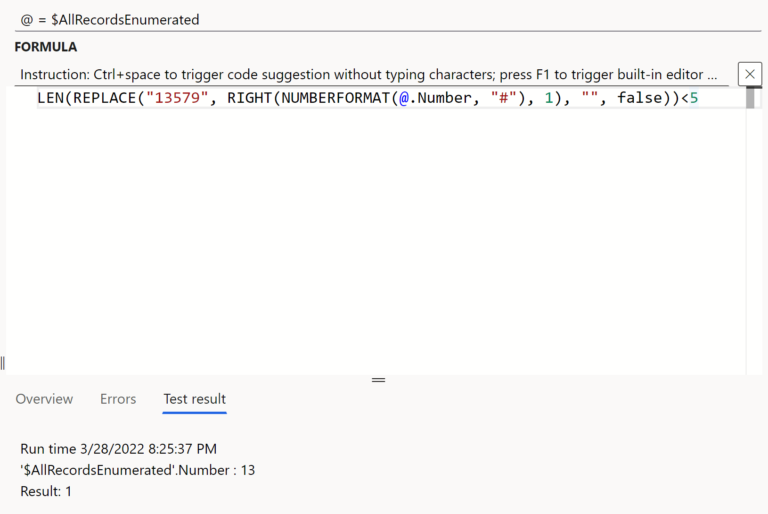

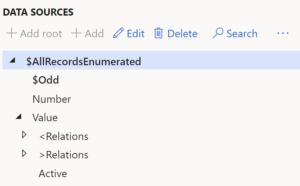

Applied to the lines, the ENUMERATE(Lines) function produces a completely new object {Number, Value}, and every binding “@” becomes “@.Value”. This is a massive change to the report, and it makes the format very hard to maintain and upgrade (rebase).
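To see why this hurts, here is a rough Python analogy (not ER code): once each record is wrapped in a {Number, Value} pair, every field access has to go one level deeper. The line fields `ItemId` and `Qty` are hypothetical, and numbering from 1 is assumed.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Line:
    # Hypothetical report line; the field names are illustrative only.
    ItemId: str
    Qty: int


@dataclass
class Enumerated:
    # Mimics the {Number, Value} record produced by ENUMERATE(Lines).
    Number: int
    Value: Line


def enumerate_lines(lines: Iterable[Line]) -> List[Enumerated]:
    # Wrap every line in a new object; numbering is assumed to start at 1.
    return [Enumerated(Number=i, Value=line) for i, line in enumerate(lines, start=1)]


lines = [Line("A-100", 2), Line("B-200", 5)]

# Before: a binding reads the field directly, like @.ItemId
before = [line.ItemId for line in lines]

# After: every binding has to go through .Value, like @.Value.ItemId
after = [(e.Number, e.Value.ItemId) for e in enumerate_lines(lines)]

print(before)  # ['A-100', 'B-200']
print(after)   # [(1, 'A-100'), (2, 'B-200')]
```

Every existing binding in the format changes shape in the same way, which is what makes the rebase so painful.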

XML and CSV outbound formats have a Counter element that counts itself automatically. Excel and Word outbound formats have just Ranges and Cells. I thought we worked with lists as isolated objects and that Electronic Reporting had no internal variables aware of the execution history.

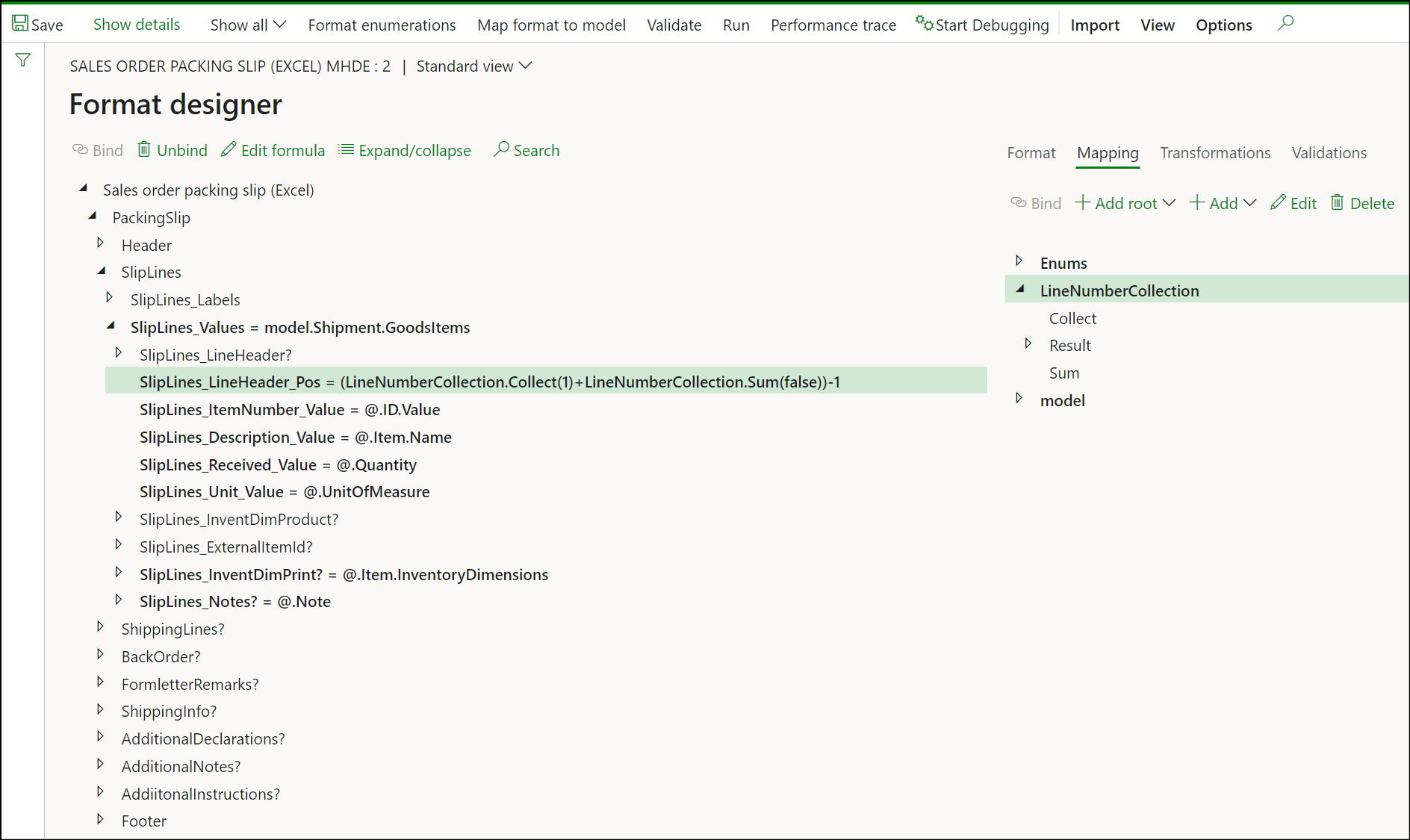

But today I finally understood what the DATA COLLECTION does. It is a listener: you pass a value to it, it returns the value unchanged, but it keeps a global variable that counts and sums up the values.
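A minimal Python model of that behaviour, based only on the description above: `collect` passes the value through unchanged while the object keeps a running count and total across calls. The class and method names are hypothetical stand-ins, not the actual ER implementation.

```python
class DataCollection:
    """Hypothetical model of an ER data collection: a pass-through
    listener that remembers every value handed to it."""

    def __init__(self) -> None:
        self._values: list[int] = []

    def collect(self, value: int) -> int:
        # Record the value, then return it unchanged.
        self._values.append(value)
        return value

    def count(self) -> int:
        # Number of values collected so far.
        return len(self._values)

    def total(self) -> int:
        # Running total of everything collected so far (counterpart of Sum).
        return sum(self._values)


quantities = DataCollection()
row_values = [quantities.collect(q) for q in (2, 5, 3)]
print(row_values)          # [2, 5, 3]  (passed through unchanged)
print(quantities.count())  # 3
print(quantities.total())  # 10
```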

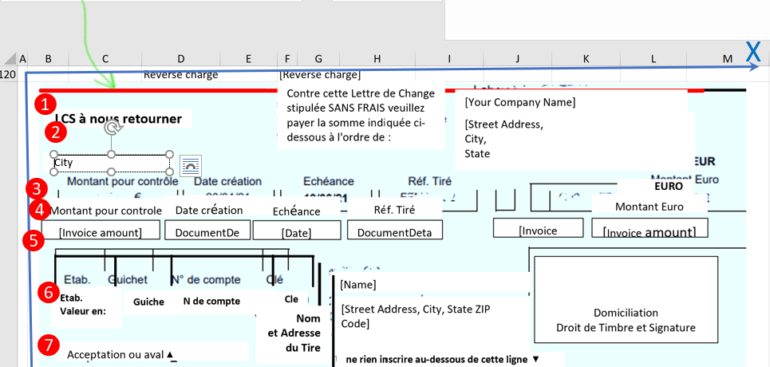

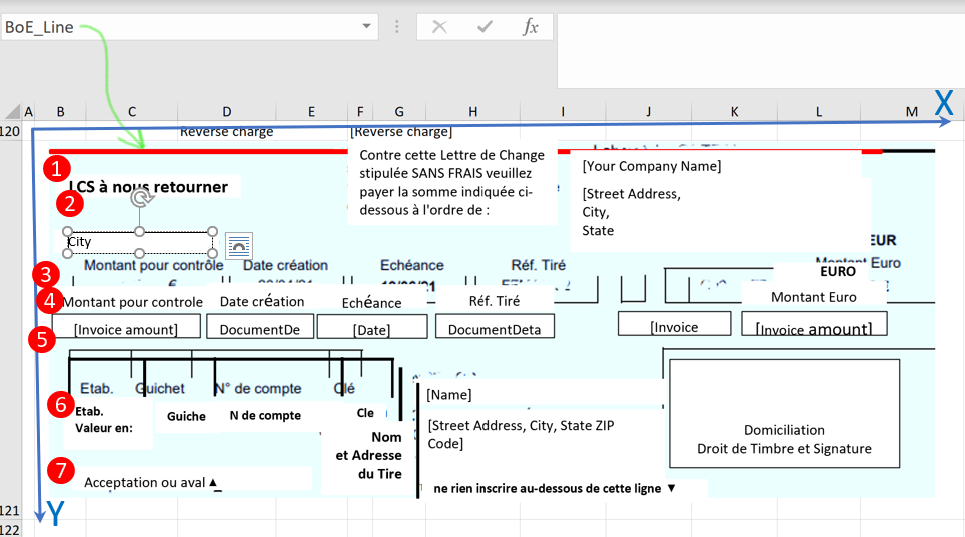

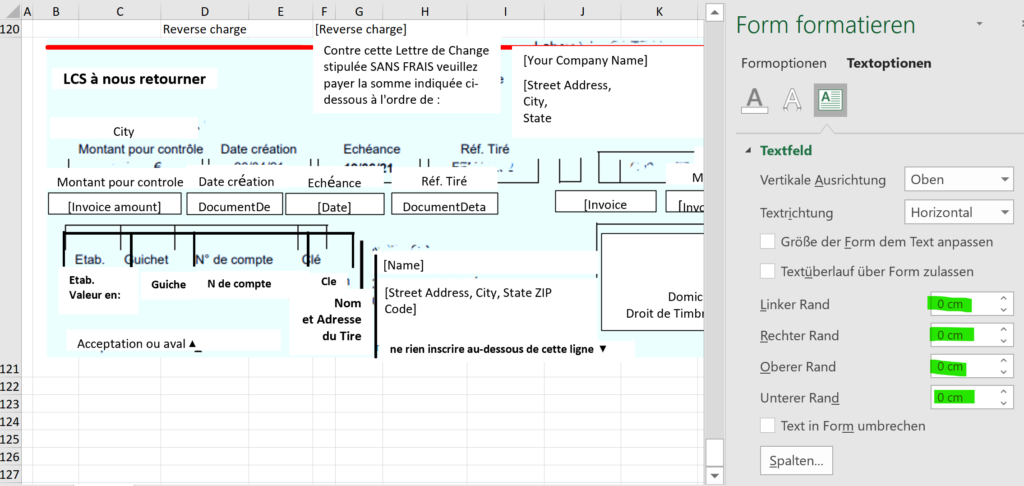

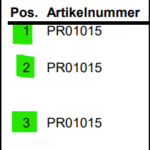

For example, to enumerate delivery note (en-us: packing slip) lines, first create a root Data collection of the integer type on the Mapping tab to the right, set Collect all values = Yes, and call it LineNumberCollection.

Then put the following formula into the Excel cell that holds the line number:

`LineNumberCollection.Collect(1)+LineNumberCollection.Sum(false)-1`

The first call, LineNumberCollection.Collect(1), takes 1, records it internally and returns the 1 back into the cell. The second call, LineNumberCollection.Sum(false), adds the running total of the 1s recorded so far, including the current one. The last operand subtracts 1, so line k gets 1 + k - 1 = k.
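Replaying the formula line by line in a small Python model shows how the numbers come out. The model assumes, as the explanation above implies, that `Sum` already includes the value collected earlier in the same expression; the class and function names are hypothetical stand-ins, not ER code.

```python
class LineNumberCollection:
    """Hypothetical stand-in for the root Data collection of the integer type."""

    def __init__(self) -> None:
        self._values: list[int] = []

    def collect(self, value: int) -> int:
        self._values.append(value)  # remember the value...
        return value                # ...and hand it back unchanged

    def total(self) -> int:
        return sum(self._values)    # running total, including the latest call


def line_number(coll: LineNumberCollection) -> int:
    # Mirrors Collect(1) + Sum(false) - 1; Python evaluates left to right,
    # so collect() runs before total() reads the running sum.
    return coll.collect(1) + coll.total() - 1


coll = LineNumberCollection()
numbers = [line_number(coll) for _ in ["line A", "line B", "line C"]]
print(numbers)  # [1, 2, 3]
```

On line k the running total is k, so the cell shows 1 + k - 1 = k.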

Electronic reporting blog series

Further reading:

Customer invoice falsifier for D365 for Finance

Amend GDPdU = GoBD = FEC

Z4-Meldung an Bundesbank

Enumerate lines in Configurable Business Documents

D365 Electronic Reporting: JOIN records in pairs

Electronic Reporting (ER) Cookbook 4: References in a model

Electronic reporting for data migration

Electronic Reporting (ER) Cookbook 3: Working with dates

Electronic Reporting (ER) Cookbook 2: new tips from the kitchen

Electronic Reporting (ER) Cookbook

Add another Calculated field at the row level to check if the current record has an odd or an even row number:
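The parity test itself boils down to the remainder of the line number divided by 2. A minimal Python sketch, assuming the 1-based line number is produced as described above; the function name is hypothetical, and the actual ER expression is not shown here.

```python
def is_even_row(line_number: int) -> bool:
    # A 1-based line number is even when dividing by 2 leaves no remainder.
    return line_number % 2 == 0


flags = [(n, "even" if is_even_row(n) else "odd") for n in range(1, 5)]
print(flags)  # [(1, 'odd'), (2, 'even'), (3, 'odd'), (4, 'even')]
```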
