Picking list journal: Inventory dimension Location must be specified

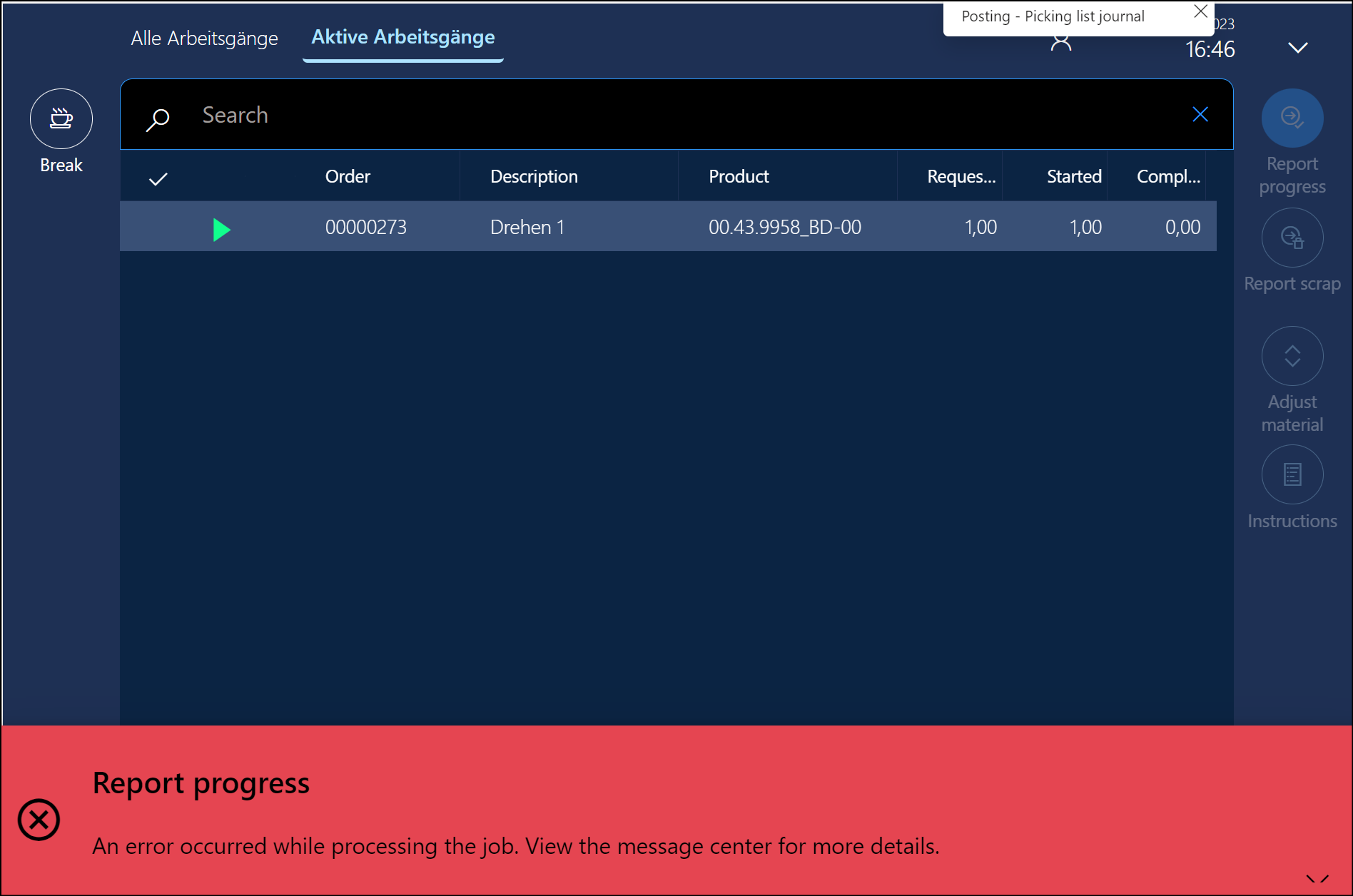

One of the most notorious errors in Dynamics 365 production and warehouse management, if not the most notorious, shows up at the “Production floor execution” terminal when reporting as finished (RaF). The screen displays the error message “An error occurred while processing the job…”.

In the Action center, you receive the following detailed error message:

- Posting – Picking list journal

- Inventory dimension Location must be specified.

This is caused by a failed material withdrawal under the Finish backflushing principle (actual quantity = planned quantity, see The case of a missing flushing principle). The causes vary, but the most common one is incomplete warehouse work: the materials have not yet been brought to the machine’s input location, yet someone is trying to report the finished or semi-finished product as complete.

This error pops up very often during the testing phase of the Dynamics 365 implementation. You may say: “Hey, let them finish the picking work first; this may never happen in real life, since the materials are going to be missing for the assembly! The sequence of production steps must be adhered to!”

It is not that simple. I’d say we are talking about a significant design flaw in the Dynamics 365 for SCM system.

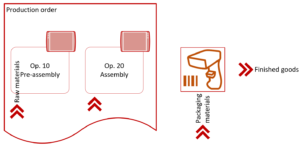

Let’s conduct a thought experiment. In an MTS (make-to-stock) scenario, imagine 3 production orders (PO1, PO2, PO3) planned for the same product on the same day. All of them are released at once early in the morning, and each of them produces warehouse work for picking the same raw material. If they are not consolidated into one production wave, the warehouse work for PO3 may be completed much earlier than the warehouse work for PO1, even though PO1 is started and finished first, because warehouse work has no particular order or priority. The material is at the inbound location of the work centre, and the machine operator does not care whether it is meant for the current or the next order. Yet reporting the progress at the terminal fails because the material is allocated to the wrong order.
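The thought experiment can be played through in a few lines of Python. This is only a toy model, not Dynamics 365 code: the `PickWork` class, the two functions, and the quantity of 100 per order are all hypothetical names and values chosen for illustration. It assumes the simplified rule that backflushing for an order succeeds only if that order's own picking work is closed.

```python
from dataclasses import dataclass


@dataclass
class PickWork:
    """Hypothetical stand-in for warehouse work that brings raw material to the machine."""
    order: str
    qty: int
    closed: bool = False


def qty_at_input_location(work_list):
    """Physical quantity at the inbound location: the sum of all closed work."""
    return sum(w.qty for w in work_list if w.closed)


def can_report_as_finished(order, work_list):
    """Simplified rule: the order's own picking work must be closed."""
    return any(w.order == order and w.closed for w in work_list)


# Three orders released at once, each with its own picking work.
work = [PickWork("PO1", 100), PickWork("PO2", 100), PickWork("PO3", 100)]

# The warehouse worker happens to complete the work for PO3 first.
work[2].closed = True

material_present = qty_at_input_location(work)      # material is physically there
po1_ok = can_report_as_finished("PO1", work)        # but it is not allocated to PO1
po3_ok = can_report_as_finished("PO3", work)
print(material_present, po1_ok, po3_ok)
```

In this model the material for one order is physically at the machine, yet PO1 cannot be reported as finished, which is the mismatch the operator experiences at the terminal.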

Explanation

Assuming the Automatic BOM consumption parameter in Production control > Setup > Manufacturing execution > Production order defaults is set to Flushing principle and Post automatically is set to Yes, material consumption becomes an integral part of every RaF transaction at the Production floor execution terminal. If the picking list posting fails, the whole transaction is rolled back.

In reality, it is even worse than a clean rollback. On each failed attempt at progress reporting, a new picking list journal is created. The mere presence of such a picking list prevents any further attempts, because the open line(s) in the picking list journal block a portion of the bill of materials through a TransChildRefId identifier, which links the journal line to the BOM line.

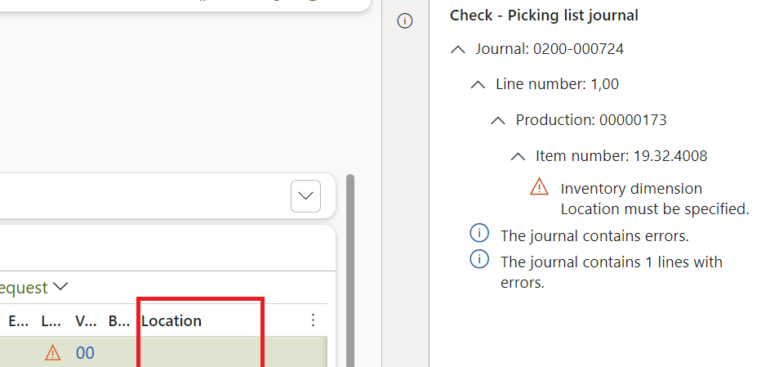

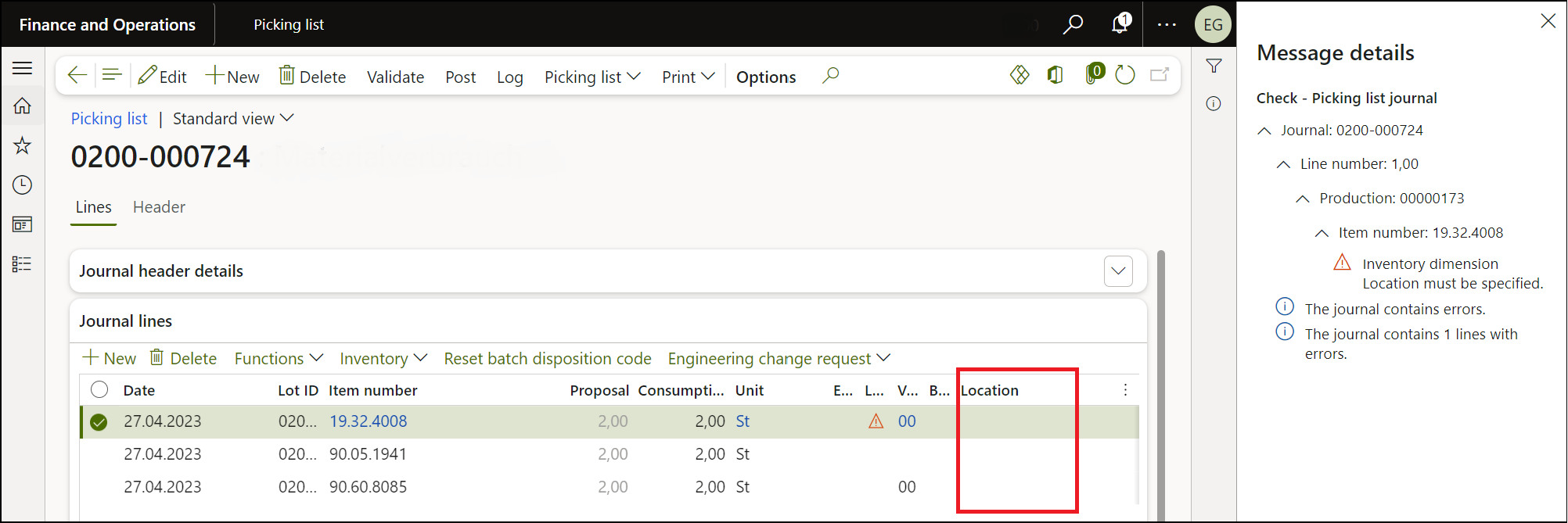

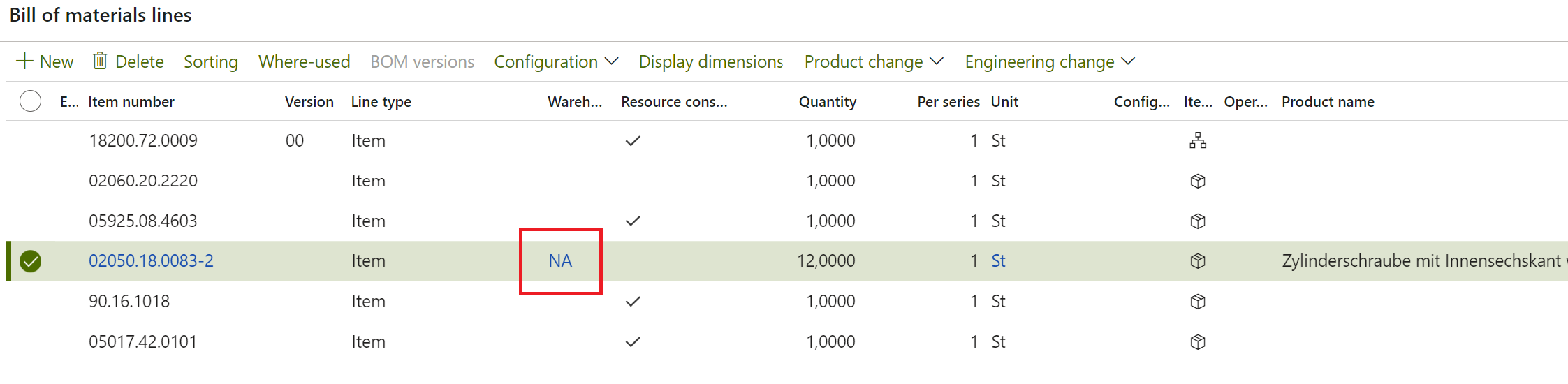

With advanced Warehouse management turned on in Dynamics, the warehouse locations in the master BOM must remain empty. They are allocated during the Release or Release to warehouse phase in Production. If the Resource consumption checkbox is set in every BOM line, the input location of the work centre is determined by the Warehouse management module at run time, as the picking list journal lines are created. While doing so, for some reason, it looks for the location in the picking warehouse work. If that inbound location is not reserved there in InventTrans, or the work is not closed yet, the Location dimension in the picking list journal is going to be empty.
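The behaviour described above can be summarised in a small Python sketch. It is a deliberately simplified model under stated assumptions, not the actual posting logic: the classes, the function names, the location `IN-01`, and the result strings `BLOCKED` and `POSTED` are hypothetical. Only the location error text is taken from the real message.

```python
from dataclasses import dataclass, field

LOCATION_ERROR = "Inventory dimension Location must be specified."


@dataclass
class PickWork:
    """Hypothetical picking work: puts material to the machine's input location."""
    order: str
    put_location: str
    closed: bool


@dataclass
class ProductionOrder:
    number: str
    # Unposted picking list journals left behind by failed attempts.
    open_journals: list = field(default_factory=list)


def resolve_location(order_no, work_list):
    """Assumption: the location is taken from closed picking work of the same order."""
    for w in work_list:
        if w.order == order_no and w.closed:
            return w.put_location
    return None


def report_as_finished(order, work_list):
    if order.open_journals:
        return "BLOCKED"  # an open journal line still holds the BOM line
    location = resolve_location(order.number, work_list)
    if location is None:
        # The failed attempt leaves an open journal with an empty location.
        order.open_journals.append({"location": None})
        return LOCATION_ERROR
    return "POSTED"


po = ProductionOrder("PO1")
work = [PickWork("PO1", "IN-01", closed=False)]

first = report_as_finished(po, work)    # work not closed yet
work[0].closed = True
second = report_as_finished(po, work)   # work closed, but the old journal blocks
po.open_journals.clear()                # delete the open journal
third = report_as_finished(po, work)
print(first, second, third, sep="\n")
```

The second attempt is the instructive one: even after the warehouse work has been completed, the leftover journal from the first attempt has to be deleted before reporting can succeed.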

Troubleshooting

The troubleshooting steps are as follows:

- First and foremost, search for any open picking lists for the production order with the button View / Journals / Picking List.

- Alternatively, use the list of all open picking journals in the menu Production control > Adjustments > Picking List, filtered by the order number. This list should actually be empty, meaning all picking lists should have been posted.

- Select the open (not posted) picking list journals and delete them all at once.

- Look for any open warehouse work for production, meaning the warehouse work for materials picking: Pick at the main warehouse, Put to the inbound location of the machine. Use the button Warehouse / General / Work details at the production order.

- If there is open work, ensure it is processed on the mobile device.

- If there is no open warehouse work for the production order, initiate the warehouse picking process again using the button Release to warehouse. A new production wave will be generated, and new warehouse work will be created in the background. If the materials and parts are already at the machine’s location, this warehouse work will automatically be closed, and the materials will be reserved at that location for the production order. The next picking list will have the correct location in all lines, and posting this entry will likely work.

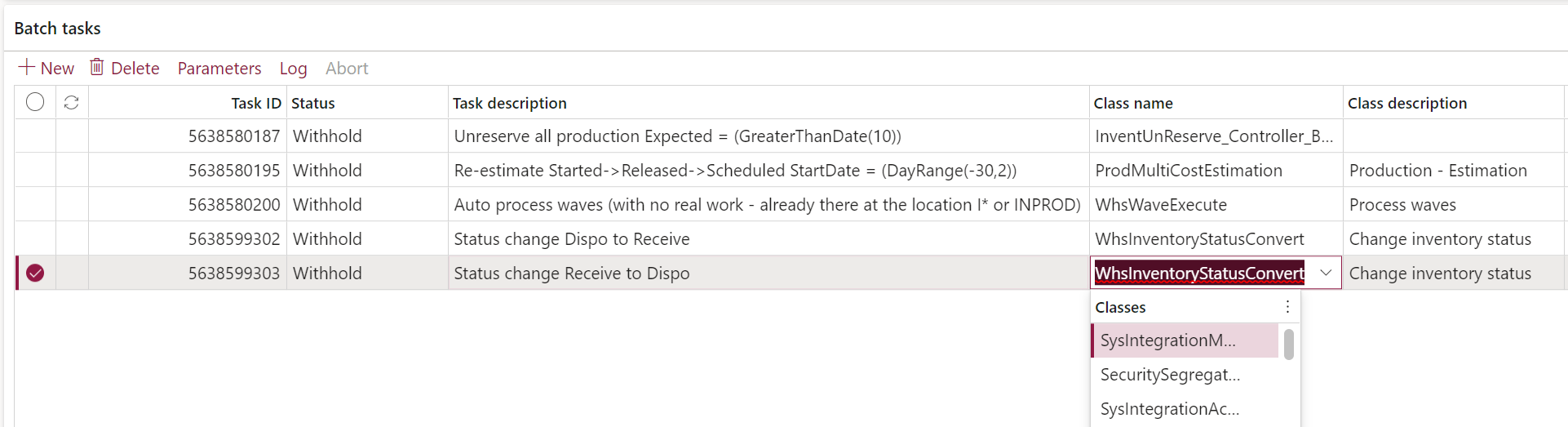

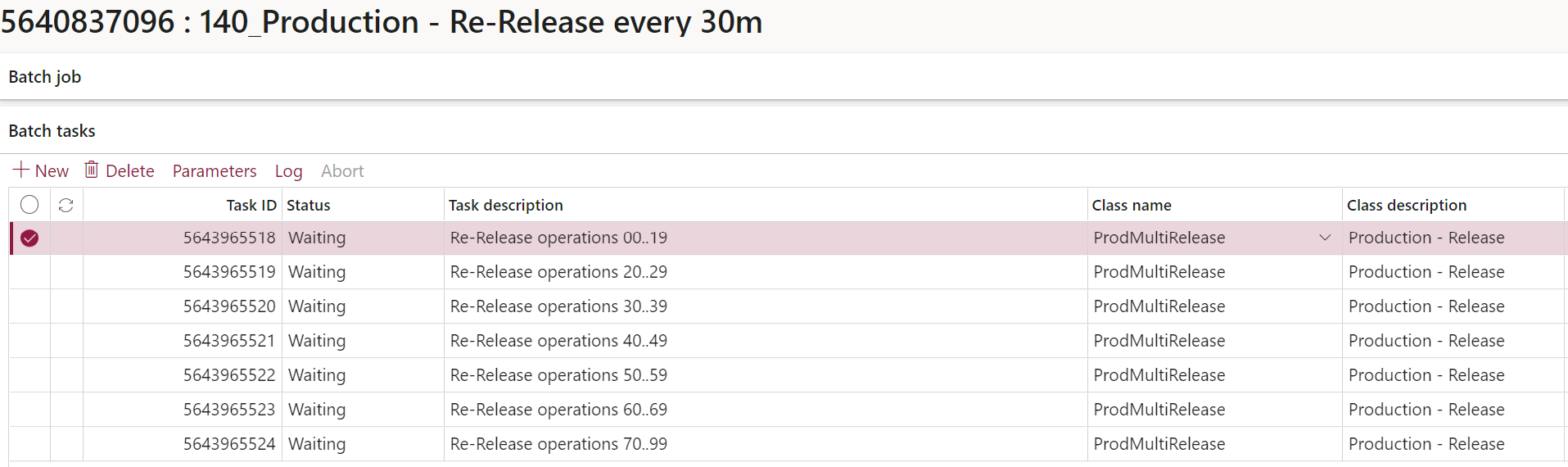

- This deals with the race condition in the above thought experiment, too: the material already found at the location will be reallocated to the current order. However, any open warehouse work for the current order must be cancelled first. This re-release of released orders may also be automated.

- If reporting as finished continues to fail at the terminal, the operator may forcibly reduce the consumed quantity using the Adjust material button: click on Adjust material and enter zero. Repeat this for all BOM lines, then confirm the Adjust material screen with OK. Reporting as finished should work now, and the material that was actually used can be consumed afterwards in a manual picking list journal.
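The order of the troubleshooting steps above can be condensed into a small decision function. This is a sketch of the checklist only, with hypothetical parameter names; it does not call any Dynamics 365 API, and the inputs are assumed to be looked up manually in the forms mentioned above.

```python
def next_action(open_journals: int, open_work: int, already_re_released: bool) -> str:
    """Return the next troubleshooting step after a failed report as finished."""
    if open_journals > 0:
        # Leftover journals from failed attempts block the BOM lines.
        return "Delete the open picking list journals"
    if open_work > 0:
        return "Process the open warehouse work on the mobile device"
    if not already_re_released:
        return "Release to warehouse again"
    # Last resort when everything above has been tried.
    return "Adjust material to zero, report as finished, consume manually"


step_a = next_action(open_journals=2, open_work=1, already_re_released=False)
step_b = next_action(open_journals=0, open_work=1, already_re_released=False)
step_c = next_action(open_journals=0, open_work=0, already_re_released=False)
step_d = next_action(open_journals=0, open_work=0, already_re_released=True)
for step in (step_a, step_b, step_c, step_d):
    print(step)
```

The function encodes the priority: open journals are always cleaned up first, because no later step can succeed while they exist.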

If the error happens often, more drastic measures may need to be taken: the flushing principle, which defines the point in time at which materials are consumed, must be changed to Available at location for most materials and parts. This decouples the material backflushing from the Report as finished feedback.

Production and Manufacturing blog series

Further reading:

Assistance and Secondary operations in D365 for SCM

Integrate APS with Dynamics 365 for SCM

D365 Mass de-reservation utility

Picking list journal: Inventory dimension Location must be specified

Consumable “Kanban” parts in D365 Warehouse management

Subcontracting with Warehouse management Part 2

Subcontracting with Warehouse management Part 1

Semi-finished goods in an advanced warehouse

The case of a missing flushing principle